Testing as Learning - 5 Insights from Oredev 2025

How the talks at Oredev showed a shift from checking correctness to reducing uncertainty together.

Every time I speak at testing conferences, I’m reminded how much the conversation around testing has evolved, and how much further we still have to go. Earlier this month, at Oredev in Malmö, one of Sweden’s biggest developer conferences, that shift was fully on display.

Of the 100+ speakers, nine focused on testing, with topics ranging from automation and AI to collaboration and communication. What stood out to me was a clear shift: from verifying correctness to enabling learning and collaboration through testing.

Testing as a Conversation

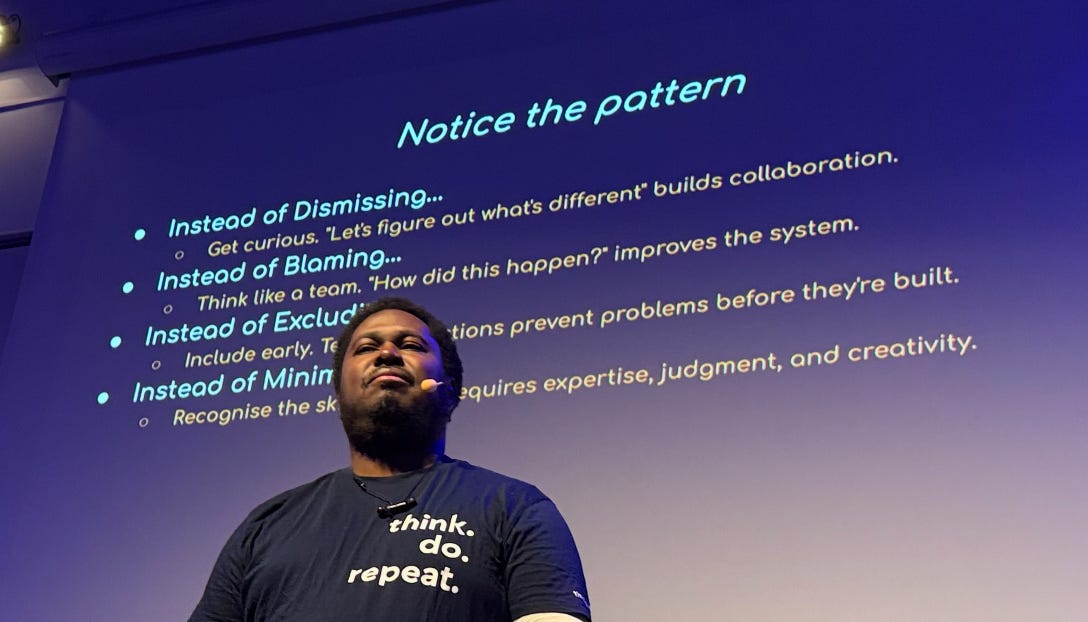

Vernon Richards of The Vernon Richard Show and Yeah but does it work? gave a really entertaining talk titled Everything the Tester in your life wants you to know about testing but is too afraid to tell you. He walked through lines every tester has heard: “It works on my machine,” “Why don’t we just automate it?” and “Testing is slowing us down.”

The core point I took away from his talk was that testers and developers complement each other. Testers often do verification work that many developers would rather not do, and developers build the product in ways testers can’t. We should be working together, not against each other. Good testing and engineering start with a shared understanding, not separate roles.

Testing as a conversation shifts the focus from doing the testing to collaborating on testing. Quality becomes a shared outcome we all contribute to, making it everyone’s responsibility.

Testing Below the UI

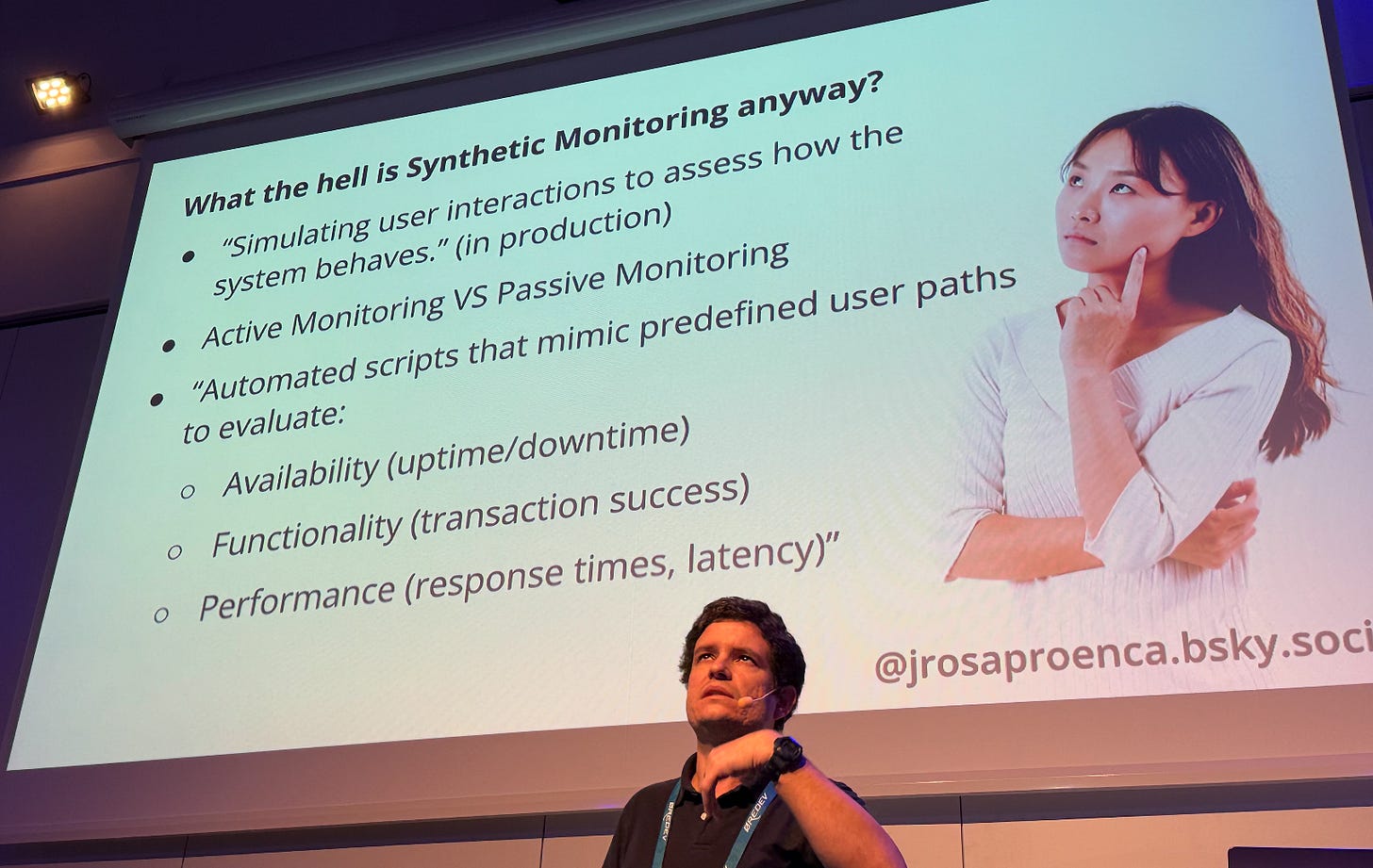

João Proença, a quality engineer at Ada Health, shared how his team uses synthetic monitoring (automated tests in production) to reduce uncertainty about system behaviour. They had relied on end-to-end UI tests but found them too flaky, with false positives slowly eroding confidence.

Switching to API calls made their tests faster, more reliable, and easier to debug, because failures pointed precisely to where the problem was.
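To make the idea concrete, here is a minimal sketch of what a single API-level synthetic check might look like. It is not Ada Health’s implementation: the endpoint URL, the thresholds, and the names `run_check` and `CheckResult` are hypothetical. What it illustrates is that each failure comes back with a specific reason (wrong status, too slow, unreachable) rather than a generic UI timeout.

```python
import time
import urllib.error
import urllib.request
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass
class CheckResult:
    url: str
    ok: bool
    status: int  # 0 means no HTTP response was received at all
    latency_s: float
    reason: str


def http_get(url: str, timeout: float = 10.0) -> Tuple[int, bytes]:
    """GET a URL and return (status, body); non-2xx responses are returned, not raised."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status, resp.read()
    except urllib.error.HTTPError as err:
        return err.code, err.read()


def run_check(
    url: str,
    expected_status: int = 200,
    max_latency_s: float = 2.0,
    fetch: Callable[[str], Tuple[int, bytes]] = http_get,
    clock: Callable[[], float] = time.monotonic,
) -> CheckResult:
    """One hypothetical synthetic check: call the API directly and report a precise reason."""
    start = clock()
    try:
        status, _body = fetch(url)
    except (urllib.error.URLError, TimeoutError) as err:
        # DNS failure, connection refused or timeout: the service never answered.
        return CheckResult(url, False, 0, clock() - start, f"request failed: {err}")
    latency = clock() - start
    if status != expected_status:
        return CheckResult(url, False, status, latency,
                           f"expected HTTP {expected_status}, got {status}")
    if latency > max_latency_s:
        return CheckResult(url, False, status, latency,
                           f"slow response: {latency:.2f}s > {max_latency_s}s")
    return CheckResult(url, True, status, latency, "ok")
```

A scheduler such as a cron job or CI pipeline could call `run_check("https://api.example.com/health")` every few minutes and alert when `ok` is false. Passing in `fetch` and `clock` keeps the check itself testable without a live service.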

This reminded me of the importance of layers of testing: UI tests that check the interface, API tests that verify the endpoints, and a handful of end-to-end journeys to confirm everything hangs together.

Testing below the UI shifts the focus from “automate everything” to being deliberate about what outcomes automation is meant to achieve.

Testing that Expands Possibility

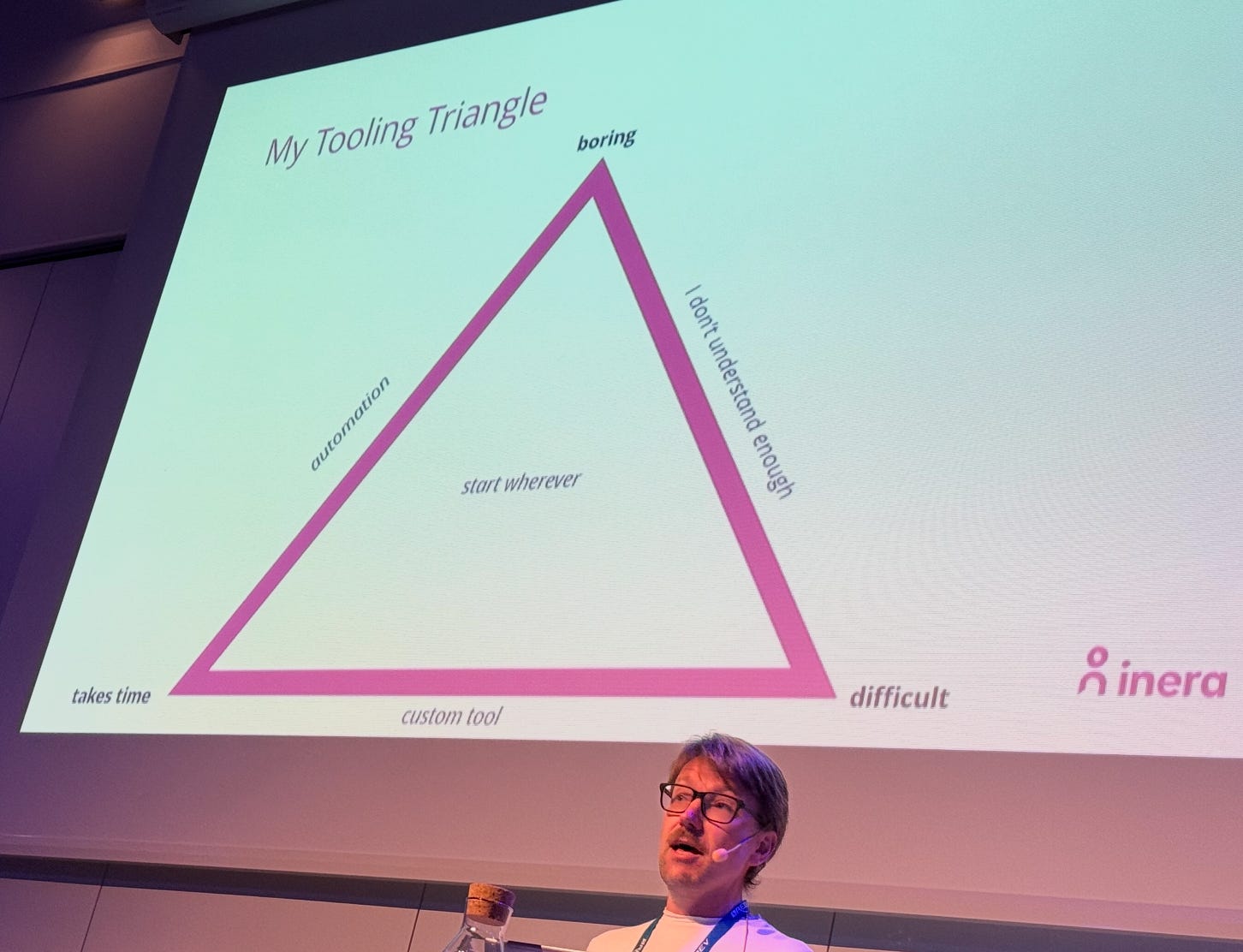

Much of the conversation around using LLMs in testing is about replacing current approaches with tests generated by AI agents. But Rikard Edgren’s talk, LLMs for Test Tooling, was different.

He used LLMs (via Cursor) not to go faster, but to go broader. This reflected what Richard Bradshaw has long advocated for with Automation in Testing: instead of using automation tools to do the testing, you use them to help you test things you otherwise couldn’t. In essence, tool-assisted testing.

What Rikard has found is that using LLMs to build new test tools is a great way to do this. You stay in control and make the judgment call on whether something is a bug, while the LLM helps you test in ways you couldn’t before.

He shared a number of different examples:

Test improver - like keeping users logged in for hours or carrying out an action repeatedly over hours or days

Information collector - grabbing data in the background that you might not see in the UI, but buried in API calls

Test visualisation - visualising background API calls to see if things are as expected
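As an illustration of the information collector idea, the sketch below reads a HAR file (the JSON format that browser developer tools can export from the Network tab) and flags background API calls that returned errors or were slow. It is not Rikard’s tooling; the `/api/` URL filter, the 1000 ms threshold and the function names are assumptions. The judgment call on whether a flagged call is actually a bug stays with the tester.

```python
import json
from typing import Any, Dict, List


def load_har(path: str) -> Dict[str, Any]:
    """Read a HAR file exported from the browser's developer tools."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def api_calls(har: Dict[str, Any], url_filter: str = "/api/") -> List[Dict[str, Any]]:
    """Extract method, URL, status and total time (ms) for matching requests in a HAR log."""
    calls = []
    for entry in har.get("log", {}).get("entries", []):
        url = entry["request"]["url"]
        if url_filter in url:  # hypothetical filter: keep only API traffic
            calls.append({
                "method": entry["request"]["method"],
                "url": url,
                "status": entry["response"]["status"],
                "time_ms": entry.get("time", 0.0),
            })
    return calls


def worth_a_look(calls: List[Dict[str, Any]], slow_ms: float = 1000.0) -> List[str]:
    """Flag calls a tester may want to investigate: error statuses and slow responses."""
    notes = []
    for call in calls:
        label = f'{call["method"]} {call["url"]}'
        if call["status"] >= 400:
            notes.append(f'{label}: HTTP {call["status"]}')
        elif call["time_ms"] > slow_ms:
            notes.append(f'{label}: {call["time_ms"]:.0f} ms')
    return notes
```

After an exploratory session, `worth_a_look(api_calls(load_har("session.har")))` would list the calls hidden behind the UI that deserve a closer look.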

Testing that expands possibilities shifts testing from merely exploring the product to exploring the full system. It gets people thinking about every area where uncertainty exists and how we might reduce it.

If you enjoyed this, I share more reflections like this in my Quality Engineering newsletter. It’s where I explore uncertainty, testing, and how we work together to build better systems. You’re welcome to subscribe and join the conversation.

Testing that Creates Value

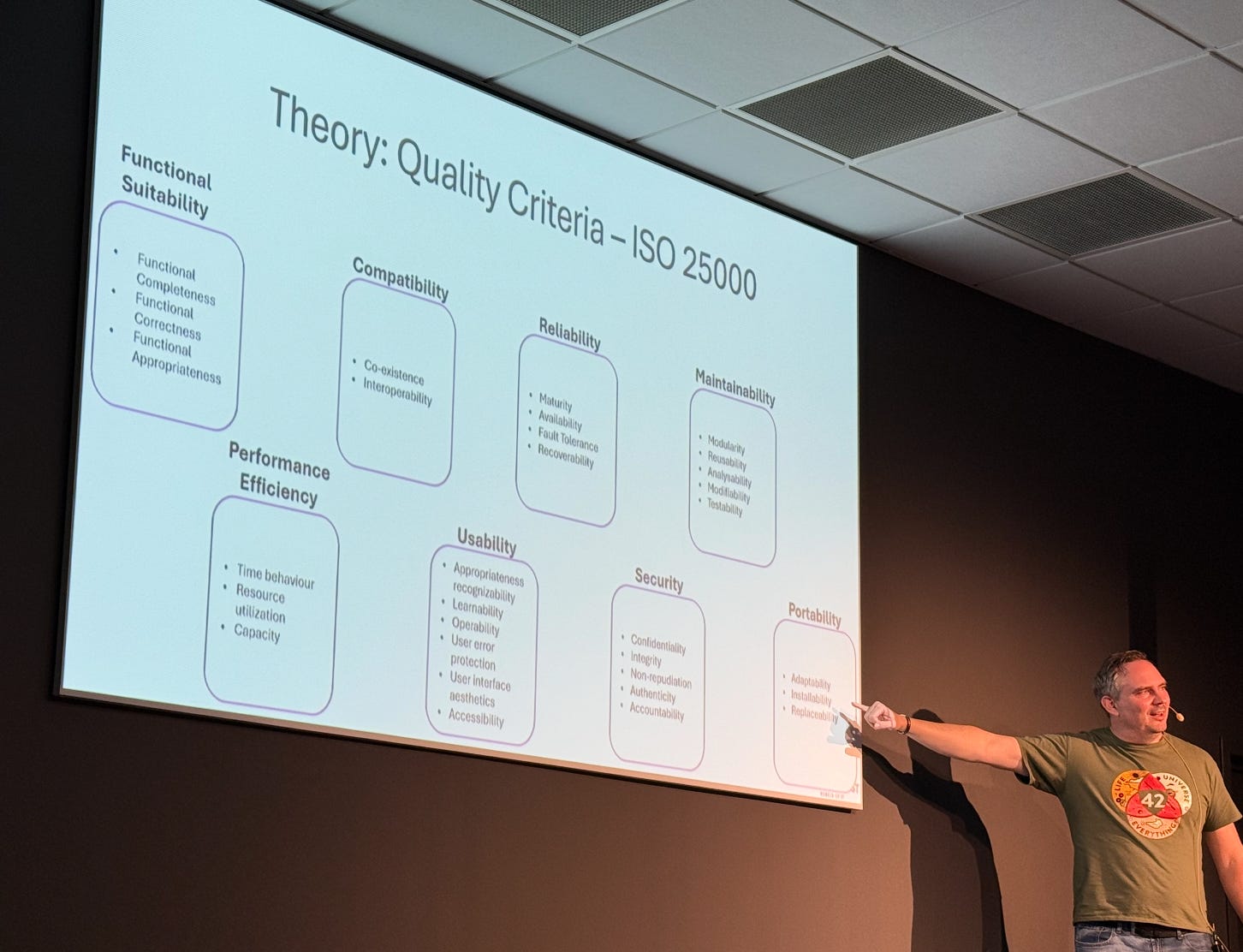

Martin Nilsson’s talk Test Coverage and Prioritisation with Quality Criteria echoed my view that quality is about attributes that are valuable to key stakeholders. He used the eight quality characteristics from the ISO 25000 series (defined in ISO/IEC 25010) as the key quality attributes to evaluate his software systems against.

It’s rare to see teams explicitly map what’s valuable to measurable attributes, so it was great to see someone else make that connection.

A takeaway for me was that coverage only matters if it maps to what someone cares about. Who are your stakeholders, and which quality attributes matter to them?
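One way to make that question concrete is to record which quality characteristics each stakeholder cares about and check them against where tests actually exist. The sketch below is not Martin’s approach; it assumes the eight product quality characteristics of ISO/IEC 25010:2011 and uses made-up stakeholders and test counts.

```python
from typing import Dict, List

# Product quality characteristics from ISO/IEC 25010:2011 (the 2023 revision changes this list).
ISO_25010_CHARACTERISTICS = frozenset({
    "functional suitability", "performance efficiency", "compatibility", "usability",
    "reliability", "security", "maintainability", "portability",
})


def coverage_gaps(
    priorities: Dict[str, List[str]],
    tests_per_characteristic: Dict[str, int],
) -> Dict[str, List[str]]:
    """Map each stakeholder to the characteristics they care about that no test addresses."""
    gaps: Dict[str, List[str]] = {}
    for stakeholder, wanted in priorities.items():
        unknown = set(wanted) - ISO_25010_CHARACTERISTICS
        if unknown:
            raise ValueError(f"{stakeholder}: not ISO/IEC 25010 characteristics: {sorted(unknown)}")
        missing = [c for c in wanted if tests_per_characteristic.get(c, 0) == 0]
        if missing:
            gaps[stakeholder] = missing
    return gaps


# Hypothetical stakeholders and test counts, for illustration only.
priorities = {
    "customers": ["usability", "performance efficiency"],
    "operations": ["reliability", "maintainability"],
    "security team": ["security"],
}
tests = {"functional suitability": 120, "usability": 4, "security": 2}
print(coverage_gaps(priorities, tests))
# {'customers': ['performance efficiency'], 'operations': ['reliability', 'maintainability']}
```

In this made-up example the suite has plenty of functional tests, yet nothing addresses what operations cares about, a gap that a “100% executed” metric would not reveal.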

Testing that creates value shifts the narrative of testing from metrics that no one cares about (“we executed 100% of our tests”) towards value that matters (“we’re confident that the system will perform as expected under typical user load”). This helps testers and quality engineers show the value their work brings to teams, rather than being seen as a bottleneck or a cost centre to be eliminated.

Testability is Everywhere

Emma Armstrong’s talk Access Points for Testing really got me thinking. She explored how testability isn’t just about code. It’s in your team’s processes, products, and people. Seeing these as leverage points helps improve how we test across environments, data, hardware, and observability.

One key takeaway was about communication, which is one of the biggest enablers for quality professionals working across these areas. Emma reminded me that testability is a system attribute, not a technique, so we need to be specific about what it looks like and how it enables learning.

Emma also made some great book recommendations.

Seeing testability everywhere shifts the perspective away from something that can be tacked on at the end, towards something that must be built in at every level of the software development lifecycle. Without it, building quality becomes incredibly difficult, which is why all disciplines need to advocate for it.

Closing

Across these talks, a pattern appears to emerge: testing is no longer just about finding bugs or proving correctness. It’s about creating feedback loops in code, in teams, and in systems. Together, these talks shift the conversation away from testing and towards quality. Our work as quality engineers isn’t really about testing, automation, or tooling. It’s about how we show up, how we communicate quality, and how we enable our engineering teams to build it in, one conversation at a time.

Which of these shifts have you seen in your own work? Or is something different happening in your teams? I’m really interested in what this looks like in practice for others.