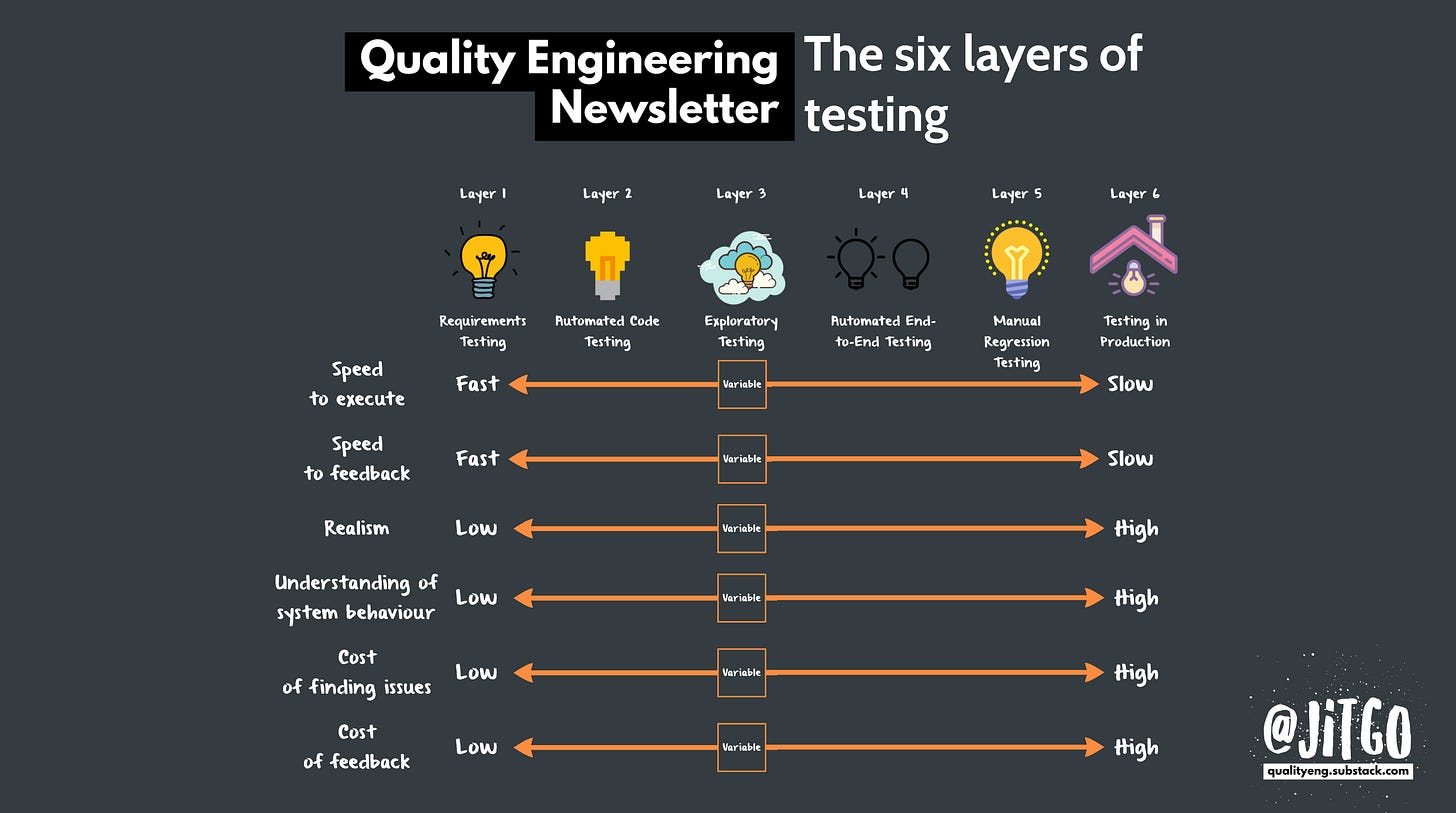

The six layers of testing

The six layers of testing is a mental model of the fundamental levels of testing and how they interrelate. It highlights the benefits and tradeoffs of each level and encourages collaborative discussion.

The Layers of Testing

I was recently thinking about how I talk about testing when working with engineering teams and realised that I have this mental image. It is an almost ideal state of testing, which happens to be a mixture of the testing pyramid with a few other testing stages thrown in.

Why the Testing Pyramid Isn't Enough

Now, I don't visualise it (mentally or otherwise) as a pyramid. While the pyramid is a good rule of thumb (have more code-level tests than end-to-end tests), it's not all that helpful beyond that. People tend to get stuck on what the layers are and what shape they should take for them, e.g. trophies, ice cream cones, honeycombs, hourglasses.*

* Check out Nick Moore's post on the Qase blog, which has a good history of the test pyramid.

Mental scaffolding

Not only that, but the layers of testing I'm often thinking of can vary from team to team depending on their context, level of skill, or time required to implement tests. As we're discussing testing, I'm mentally visualising the different layers and…

