Linky #4 - Improving Quality: Lessons from AI, Heuristics, and Decision-Making

A curated list of thought-provoking reads on quality, AI, and decision-making.

Welcome to the fourth edition of Linky (scroll to the bottom for past issues). In each issue, I'll highlight articles, essays, or books I've recently enjoyed, with some commentary on why they caught my attention and why I think they're worth your time.

This week's Linky is packed with reads on quality, decision-making, and AI. From the hidden social impacts of remote work to practical tools for testing in production, plus heuristics to help you navigate complexity, this roundup has something for every quality-minded engineer.

NOTE: This is a long one, so it might be best viewed in a browser.

Latest post from the Quality Engineering Newsletter

AI-Based Automated Testing: Is It Really the Future of Testing?

AI and testing look like a match made in heaven. Engineering teams want their testing tasks sorted, and AI looks more than happy to do it. So can it? This post dives into what LLM-based testing can do for us, and unfortunately, it's not a straight swap.

From the archives

I'm speaking at PeersCon in Nottingham next week, so I'm sharing my three takeaways from last year's conference. This post dives into how to build quality in by focusing on shippable risk, layers of testing and collaborative cultures.

What Is The Future of Working From Home? | BBC World Service

This video highlights different perspectives on remote work that I hadn't considered. For example, there's a disparity between blue-collar and white-collar workers—those without remote work options may begin resenting those who have them, which can even influence voting decisions. Worth a watch to understand the broader societal impact of remote work. Watch on YouTube

Scientist: Measure Twice, Cut Once

This post is a few years old now, but its take on data quality is worth thinking about:

There's also a more concerning reason not to rely solely on tests to verify correctness: Since software has bugs, given enough time and volume, your data will have bugs, too. Data quality is the measure of how buggy your data is. Data quality problems may cause your system to behave in unexpected ways that are not tested or explicitly part of the specifications. Your users will encounter this bad data, and whatever behavior they see will be what they come to rely on and consider correct. If you don't know how your system works when it encounters this sort of bad data, it's unlikely that you will design and test the new system to behave in the way that matches the legacy behavior. So, while test coverage of a rewritten system is hugely important, how the system behaves with production data as the input is the only true test of its correctness compared to the legacy system's behavior.

They also make an excellent point: given a large and complicated enough system, you can't test every permutation of its behaviour. Scientist (a library originally built for Ruby) lets you run the old and new behaviour side by side, almost like an A/B test, except that both code paths run on the same real request and their outputs are compared and reported back. This type of testing is often referred to as shadow testing.

This makes it both a testing tool (for comparing new vs. existing behaviour) and a monitoring tool (for observing real-world system behaviour in production).
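To make the idea concrete, here is a minimal Python sketch of shadow testing under stated assumptions. It is not Scientist's API (the real library is Ruby), and `ShadowExperiment`, `Mismatch`, `legacy_full_name`, and `new_full_name` are hypothetical names made up for illustration. The caller always gets the old (control) result, the new (candidate) code runs alongside it, and any disagreement or candidate exception is recorded rather than shown to the user.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, List


@dataclass
class Mismatch:
    """One recorded disagreement between the old and new code paths."""
    control: Any
    candidate: Any


@dataclass
class ShadowExperiment:
    """Hypothetical shadow-testing helper; NOT the Scientist library's API."""
    name: str
    control: Callable[..., Any]    # existing (legacy) behaviour
    candidate: Callable[..., Any]  # new behaviour being evaluated
    runs: int = 0
    mismatches: List[Mismatch] = field(default_factory=list)
    errors: List[Exception] = field(default_factory=list)

    def run(self, *args: Any, **kwargs: Any) -> Any:
        # The legacy path always runs, and its result is what the caller receives.
        control_result = self.control(*args, **kwargs)
        self.runs += 1
        try:
            candidate_result = self.candidate(*args, **kwargs)
        except Exception as exc:
            # A failing candidate is recorded, never surfaced to the user.
            self.errors.append(exc)
            return control_result
        # Assumption: plain equality is a good enough comparison for this example.
        if candidate_result != control_result:
            self.mismatches.append(Mismatch(control_result, candidate_result))
        return control_result


# Hypothetical legacy and rewritten functions for illustration.
def legacy_full_name(first: str, last: str) -> str:
    return f"{first} {last}".strip()


def new_full_name(first: str, last: str) -> str:
    return " ".join([first, last])


experiment = ShadowExperiment("full-name", legacy_full_name, new_full_name)
# Stand-in for production inputs, including one "bad data" record (empty last name).
for first, last in [("Ada", "Lovelace"), ("Grace", "Hopper"), ("Ada", "")]:
    experiment.run(first, last)

print(experiment.runs, len(experiment.mismatches))  # 3 1
print(experiment.mismatches[0])  # Mismatch(control='Ada', candidate='Ada ')
```

The rewrite agrees with the legacy code on the clean inputs but not on the record with an empty last name. That is the kind of data-driven legacy behaviour the quoted passage warns tests alone can miss. The real Scientist library builds more on top of this idea, such as custom comparisons and publishing results to your own monitoring.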

Scientist is a great way to maintain the quality of your system while you change it, and to build quality in, because it uses real production inputs to check the new code's behaviour against the old. The GitHub repository is actively maintained, and the blog post explains how it works.

If I had one criticism, it would be the name—'Scientist' doesn't immediately tell you what it does. But what would be a more descriptive alternative?

FS Blog | General Thinking Tools | Occam's Razor

Occam's razor is the intellectual equivalent of "keep it simple."

When faced with competing explanations or solutions, Occam's razor suggests that the correct explanation is most likely the simplest one, making the fewest assumptions.

This doesn't mean the simplest theory is always true, only that it should be preferred until proven otherwise. Sometimes, the truth is complex, and the simplest explanation doesn't account for all the facts.

The key to Occam's Razor is understanding when it works for you and against you. A theory that is too simple will fail to capture reality, and one that is too complex will collapse under its own weight.

Heuristics like "keep it simple" are helpful when you're working in complex environments and don't have enough information to make decisions. They can help you move forward while you gather more information, build a picture of what is happening, and make more informed decisions. Read on FS Blog.

I’m speaking at PeersCon in Nottingham, UK, on the 13th of March on Speed Vs Quality. Tickets are only £25, and the lineup looks great. If you can make it, come and say hello.

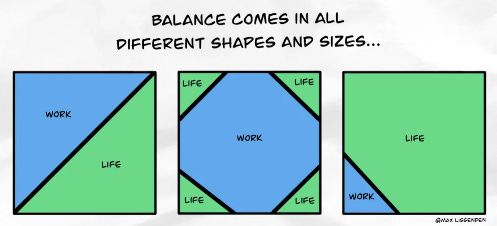

6 Habits to Help You Focus | Food for Thought | Max Lissenden

Default to 'no'.

Establish (and uphold) boundaries.

Respond with this when asked to take on an additional task: "I'm more than happy to assist…However, if we're adding this to my responsibilities, what can we remove?"

Distinguish between what's urgent and what's important. [Learn more about Productivity vs. Impact in Linky #2]

Prioritise sleep.

Quit multitasking.

Working at senior individual contributor (IC) levels, or what some call Staff+, often means working across lots of different teams with a lot of autonomy and limited guidance from line managers. That makes self-managing and figuring out what's worth your time both essential and tricky. These habits could help you do this.

One thing to note is that how well these habits work depends on the context and scenario in which you work. They're like heuristics for decision-making: they help you move forward without getting lost in the weeds. However, as you uncover more information, you will need to adjust your approach and assess whether these habits support or constrain you. Read on Thoughts on Minimalism.

Small Amounts of Fine-Tuning Can Make AIs "Evil" | Ethan Mollick

This is a crazy paper that is worth paying attention to. Fine-tuning a big model like GPT-4o on a small amount of insecure code, or even "bad numbers" (like 666), makes it misaligned in almost everything else. It becomes more likely to offer misinformation, spout anti-human values, and talk about admiring dictators. Why is unclear.

The interesting part is how little fine-tuning was needed to do this, which makes you wonder what else is lurking in the data. You could see people trying to hack LLM systems using these techniques. It's something to keep in mind if you fine-tune your own LLMs for specific testing tasks. Read Ethan Mollick's LinkedIn post.

Building Quality In: A Practical Guide for QA Specialists (and everyone else) | Rob Bowley

The best QA specialists aren't testers; they're quality enablers who shape how software is built, ensuring quality is embedded from the start rather than checked at the end.

It's great to see someone who isn't in QA but is a Chief Product & Technology Officer (CPTO) with a perspective so closely aligned with quality engineering. It's a great resource if you want people to understand some of the practical things QA can do to start building quality in. I find it helpful to keep posts like this in my back pocket because they:

Show that building quality in is not a new idea.

Show that it's a perspective held outside of QA roles, too.

Give you something to share as a reminder of the why, what, and how of building quality in.

Read the details on Rob's blog.

Use AI in Areas Where You're Already an Expert | Ethan Mollick

Start by testing it in areas where you are an expert. Don't ask it to predict the future (it isn't made for that) but ask it for something analytical and research-y that you know, where you can judge its opinion and the quality of the sources it found.

This is exactly how I use generative AI tools—I only trust them in areas where I can verify the results myself. Otherwise, the extra work required to fact-check limits any efficiency gain. This approach highlights how AI can accelerate experts rather than replace them. Read Ethan's full post.

3-Hour Deep Dive Into LLMs for General Audiences | Andrej Karpathy (Founding Member of OpenAI)

This is a general audience deep dive into the Large Language Model (LLM) AI technology that powers ChatGPT and related products. It covers the full training stack of how the models are developed, along with mental models of how to think about their "psychology", and how to make the best use of them in practical applications.

This is on my watch list, but I thought it was worth sharing for people interested in understanding LLMs from first principles. The part that caught my eye was the mental models and how to think about AI "psychology."

This aligns with my takeaway from my last QEN post on AI and testing—the better we understand how these tools work, the better we can leverage them for ourselves and our engineering teams. Watch on YouTube.

Change Is Social | Jonathan Smart

Communicate three more times than you think you need to and you're a third of the way there.

Change is social.

Data, storytelling, incentive (it's a priority, the outcomes are better, the water is warm!).

I especially liked the Diffusion of Innovation curve cartoon (originally by Everett Rogers), which visualises how new ideas spread.

I see Quality Engineering as being in the "Chasm" stage. To gain wider adoption, we need to communicate the benefits, trade-offs, and case studies more effectively. The more social proof we can share, the better. From Jonathan Smart on LinkedIn.

That concludes the fourth Linky. What do you think? Is this format appealing to you? Would you prefer more content like this or something different? Please share your feedback in the comments or reply to this email.