Why we failed at testability

How we went from a fully automated testing approach back to using manual testing

This post is based on a talk about how our team adopted testability and succeeded, but ultimately failed to maintain the new way of working. It covers how we got started with testability, what caused us to fail to maintain the approach, and what other teams could do differently.

Key takeaways:

What we are doing wrong in teams that causes testability to fail

What leadership behaviours enable success with testability

What steps can be taken to maintain testability once established

Please note that this is a long post with quite a few images, so it is best viewed in a browser or the Substack app rather than your email client, which is likely to truncate it.

In 2018, I was the test lead for the mobile app team, and my job was to help that team create and implement a test strategy. At that time, I gave a talk titled Mobile Test Automation: Then, Now and Next, which discussed how we built testability into our product. It described how we went from days of manual regression testing to under an hour of what we called automated fast and slow code tests. This enabled the team to release much faster than ever before, and everything seemed great.

But by 2020, those fast and slow code tests were no longer being developed, and the team had reverted to manual regression testing.

This post will cover what happened and how we went from building in testability to losing it all. It will not cover the nitty-gritty of how those automated tests were written. If you're interested in that, let me know, and I can look into making that talk available.

But first, a brief recap of what we did back in 2018.

How we got started with testability

Most teams are pressured to work cheaper, faster, and better with less. As an engineering team, we thought testing was our most significant bottleneck. Testing was done by people and could take days; any change to the system during that time meant starting over, and issues still got through to the live environment. Automated UI testing seemed like the ideal candidate to solve all these problems. If automated UI tests could pick up much of the work testers did, testing would be quicker than a person doing it manually (cheaper and faster). It would also be repeatable and consistent, which would help us find bugs sooner (better).

What did we learn in 2018?

Testers are being wasted in most teams

Testers in most teams are used as click factories, checking for regressions whenever changes are introduced. We learned that one of the most essential types of testing testers do is exploratory testing. It can be very valuable in finding out what is and isn't working from a whole-system perspective, and it is much more than bug hunting. However, it is a very expensive way to do regression testing.

End-to-end UI automation is not the same as exploratory testing

Automated end-to-end UI tests are an expensive regression testing tool and deliver little value relative to the effort we put into them. Therefore, automated UI tests should be used sparingly: two or three tests at most.

Small batches and unit tests are the way forward

We found that small batches of work are the key to releasing quickly. Automated unit tests are really valuable for catching regressions, as they can pinpoint where a module's behaviour has changed. However, if testers are not included in this process, they will likely do more exploratory testing than is needed. And unit tests can only help if they are written well. So, how do you write good unit tests?

Unit tests are the way forward

When we asked the dev team whether they wrote unit tests, they said of course they did and showed us hundreds of them. But when we asked what those unit tests actually tested, everyone had a different explanation: "they test each method", "they test each class", "it was too hard to test, so we let the testers test it", "it's too hard to tell what a unit test covers", and "some tests check too much". These responses showed us that we needed to build a better shared understanding of what unit testing is.
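To make the question "what does this unit test test?" concrete, here is a minimal sketch of tests that each name one observable behaviour and check it only through a module's public interface, instead of mirroring one test per method or class. The `Basket` module and its discount rule are hypothetical and invented purely for illustration; the post does not describe the team's actual code or their exact definition of a good test.

```python
import unittest


class Basket:
    """Hypothetical module under test: a basket with a simple discount rule."""

    # Assumed rule for illustration: 10% off when the subtotal is 100 or more.
    DISCOUNT_THRESHOLD = 100

    def __init__(self) -> None:
        self._items: list[tuple[str, float]] = []

    def add(self, name: str, price: float) -> None:
        if price < 0:
            raise ValueError("price must be non-negative")
        self._items.append((name, price))

    def total(self) -> float:
        subtotal = sum(price for _, price in self._items)
        if subtotal >= self.DISCOUNT_THRESHOLD:
            return round(subtotal * 0.9, 2)
        return subtotal


class BasketBehaviourTests(unittest.TestCase):
    # Each test is named after one behaviour and asserts only on public
    # results, so a failure points at the behaviour that changed rather
    # than at internal details such as how items are stored.

    def test_total_without_discount_is_sum_of_prices(self) -> None:
        basket = Basket()
        basket.add("tea", 3.50)
        basket.add("milk", 1.20)
        self.assertAlmostEqual(basket.total(), 4.70)

    def test_discount_applies_at_threshold(self) -> None:
        basket = Basket()
        basket.add("kettle", 100.0)
        self.assertAlmostEqual(basket.total(), 90.0)

    def test_negative_price_is_rejected(self) -> None:
        basket = Basket()
        with self.assertRaises(ValueError):
            basket.add("refund", -5.0)
```

Saved to a file, these can be run with `python -m unittest <file>`. Because none of the tests touch `_items`, the internal storage could be rewritten without breaking them, while a change to the discount rule would fail exactly one clearly named test.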

Building a team understanding of unit testing

One of the first things we did was stop calling them unit tests and start calling them code tests. This got everyone asking what the term meant, which prompted us to define it.

What are code tests?

We set about learning, as a team, what makes good unit tests by watching some videos *1 and discussing what good unit testing meant to us. This gave us some principles of good unit tests and showed us what type of architecture would support them, which led us to hexagonal architecture, also known as ports and adapters. You can learn more from Ian Cooper's talk TDD, Where Did It All Go Wrong. We now had some principles of what code tests are and an idea of what architecture would support them. Next, we needed to identify which parts of our system best suited this type of testing.

*1 Two talks worth watching are J. B. Rainsberger’s Integrated Tests Are A Scam and Ian Cooper’s TDD, Where Did It All Go Wrong.

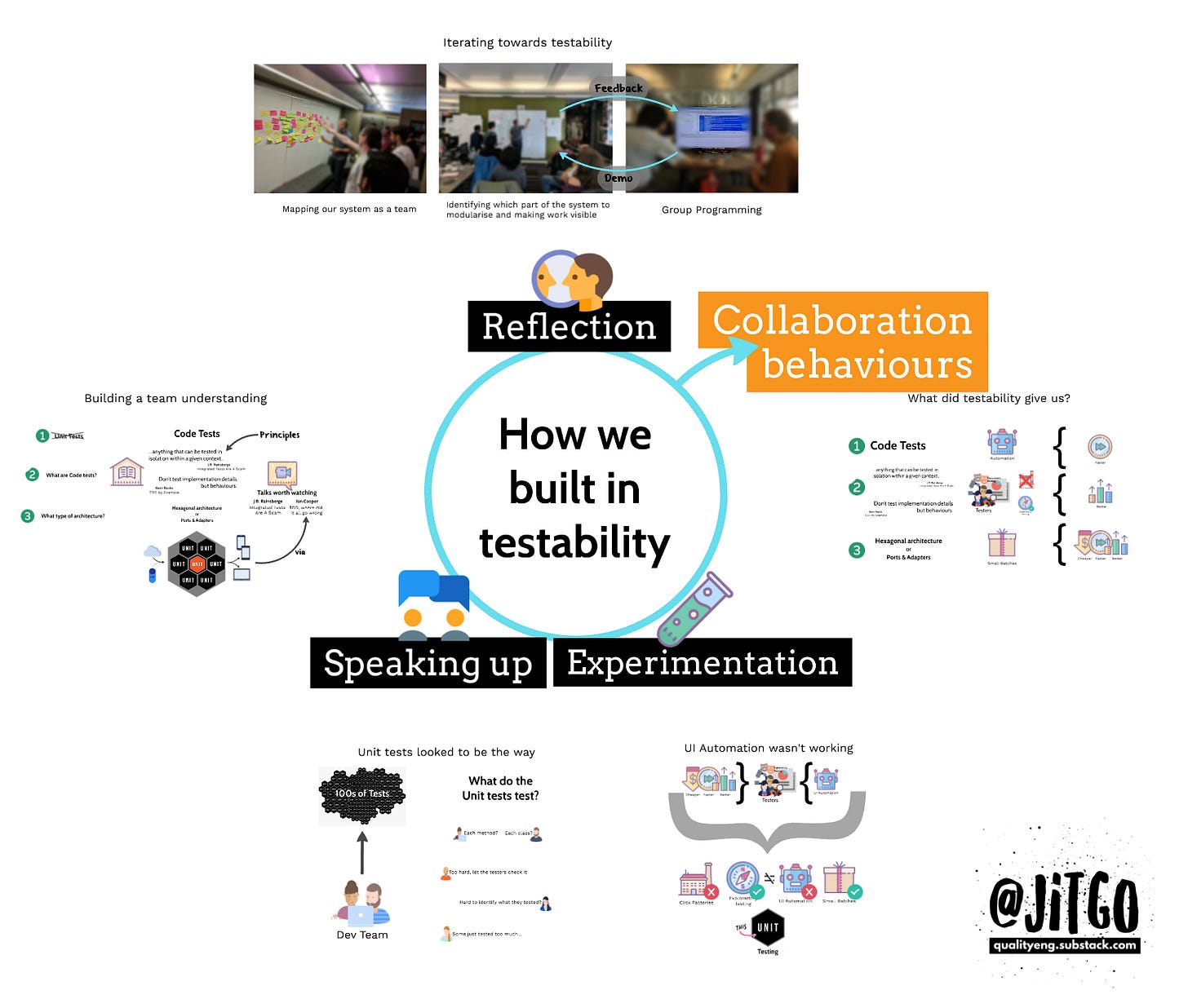
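The post does not show the team's code, so the following is only a minimal sketch of the ports and adapters idea mentioned above, assuming a hypothetical "saved articles" feature. The domain logic depends on a port (an abstract interface); a production adapter such as an HTTP client would implement that same port, while a code test plugs in an in-memory adapter so the behaviour can be checked quickly without a device, network, or UI. All names here (`ArticleRepository`, `SavedArticlesService`, `InMemoryArticleRepository`) are invented for illustration.

```python
import unittest
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass(frozen=True)
class Article:
    id: str
    title: str
    is_read: bool


class ArticleRepository(ABC):
    """Port (hypothetical): what the domain needs from the outside world."""

    @abstractmethod
    def saved_articles(self, user_id: str) -> list[Article]:
        ...


class SavedArticlesService:
    """Domain logic: depends only on the port, never on HTTP or UI code."""

    def __init__(self, repository: ArticleRepository) -> None:
        self._repository = repository

    def unread_titles(self, user_id: str) -> list[str]:
        articles = self._repository.saved_articles(user_id)
        return sorted(a.title for a in articles if not a.is_read)


class InMemoryArticleRepository(ArticleRepository):
    """Test adapter: serves canned data instead of calling a real backend."""

    def __init__(self, data: dict[str, list[Article]]) -> None:
        self._data = data

    def saved_articles(self, user_id: str) -> list[Article]:
        return self._data.get(user_id, [])


class SavedArticlesCodeTests(unittest.TestCase):
    def test_only_unread_titles_are_returned_in_order(self) -> None:
        repo = InMemoryArticleRepository({
            "u1": [
                Article("1", "Zebra facts", is_read=False),
                Article("2", "Already read", is_read=True),
                Article("3", "Apple pie", is_read=False),
            ]
        })
        service = SavedArticlesService(repo)
        self.assertEqual(service.unread_titles("u1"), ["Apple pie", "Zebra facts"])

    def test_unknown_user_has_no_unread_titles(self) -> None:
        service = SavedArticlesService(InMemoryArticleRepository({}))
        self.assertEqual(service.unread_titles("nobody"), [])
```

The design choice this illustrates is that the slow, flaky parts (network, storage, UI) sit behind adapters at the edge, so the domain module can be built, tested, and changed as its own small batch.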

Iterating towards testability

Our first step was mapping our system as a team, which made sure we all shared an understanding of our domain. We then identified which parts of the system to modularise so that they would best suit the testing approach and architecture we had chosen. Finally, we looked at how we would do this; for our team, group programming (also known as ensemble or mob programming) seemed like the best fit.

We also took an experimental approach to writing the tests. Rather than the two developers writing the tests between them, I (as the tester on the team) worked with them as a trio, and they coded through me. We always demoed what we had learned back to the rest of the team and acted on whatever feedback they had.

What did testability give us?

Code tests

Code tests enabled us to automate large parts of our regression testing, significantly reducing our uncertainty about whether the system's behaviour had changed. This helped us as a team understand whether we had built the thing right, which gave us the speed boost we wanted.

Principles of unit testing

The principles we defined helped us build a shared understanding of what testability meant to us and what it could and couldn't do. This allowed our testers to focus on high-value activities such as exploratory testing, rather than acting as click factories, which further reduced our uncertainty about whether we had built the right thing. A shared understanding of testability led to a much better and more efficient testing strategy, owned by the whole team, not just the testers.

Hexagonal architecture

Hexagonal architecture helped us break our work down into smaller batches that could be built, tested and released independently of all other components. This made it easier to pivot when we needed to, potentially making changes cheaper in the long run. Smaller batches also meant we found out sooner whether what we had built did what we wanted it to do. Overall, smaller batches were better for our team and for downstream dependencies, as we could now objectively show via tests that the system worked as intended.

Key to testability: Collaboration

If you had asked me in 2018 how you could achieve similar results, I would have said to collaborate effectively. Now, with hindsight, I can see what effective collaboration looks like. One of the big things our dev lead did was encourage us to experiment, speak up, and reflect.

He encouraged us to experiment with our approach to building in testability. He helped us start small, try something, see where it took us, iterate on it if it worked, or pivot and try something else if it didn't.

Experimentation made it safer for us to speak up, share what we knew and agreed with, and share the harder things like what we didn’t agree with, what we didn’t know and mistakes we had made along the way.

Speaking up made it that much more likely that we would reflect on our progress, looking at what worked and what didn’t, what we could do differently next time, and who else we could speak to.

Experimentation, speaking up, and reflecting helped us practice the collaborative behaviours we needed for testability to be effective.

So, what happened for testability to fail?

In 2018, this is where my talk finished, with all of us happily walking off into the sunset with a newfound way to release more value faster and better than before.

So what happened by 2020 that resulted in everything we had done being reverted to how we started?