Linky #12 - Confidence, Curiosity, and Cleaning Up Code

From better conversations about quality to AI agents doing the tidy-up

This week’s links cover: the role of confidence in QA conversations, what happens when software systems start testing themselves, and how AI agents might reshape the work of engineers. There’s also a look at why curiosity is a key quality engineering mindset, how we frame quality conversations, and a reminder that writing code has never really been the bottleneck.

Latest post from the Quality Engineering Newsletter

I've been asking questions on LinkedIn recently, and one I asked was: "If you could only change one thing about how your team talks about QA, what would it be?" This sparked quite a lively debate. I wrote up some of the responses in my latest post, "What if We Talked About Confidence in QA," covering themes around building quality in, the importance of value and outcomes, confidence vs. information, the ongoing relevance of bugs, and the problem with the term "QA." Some great perspectives were shared, and the original LinkedIn thread is worth a skim too.

Some great sessions at this year’s Agile Manchester covered a wide range of topics. What stood out for me: using the Jobs-to-be-Done framework to understand real user needs, managing how our work is perceived, and leaning into uncertainty to turn what we’ve got into what we want.

Three Qualities to Foster as a QE

"Three qualities that have nothing to do with talent or intelligence, but can make a dramatic impact on your results:

Cheerful. You are pleasant to work with and generally raise the level of energy in the room.

Accountable. You feel personally responsible for what you want to accomplish. It is not someone else's job. It's your job.

Adaptable. You can find alternate paths to success. You don't need things to be a certain way to be happy."

Accountable and adaptable are familiar, but I'd not thought of cheerful before. It clicks when called out like this: being pleasant to work with is always going to serve you well. Via 3-2-1: On becoming hard to copy, the power of fundamentals, and three qualities that matter - James Clear

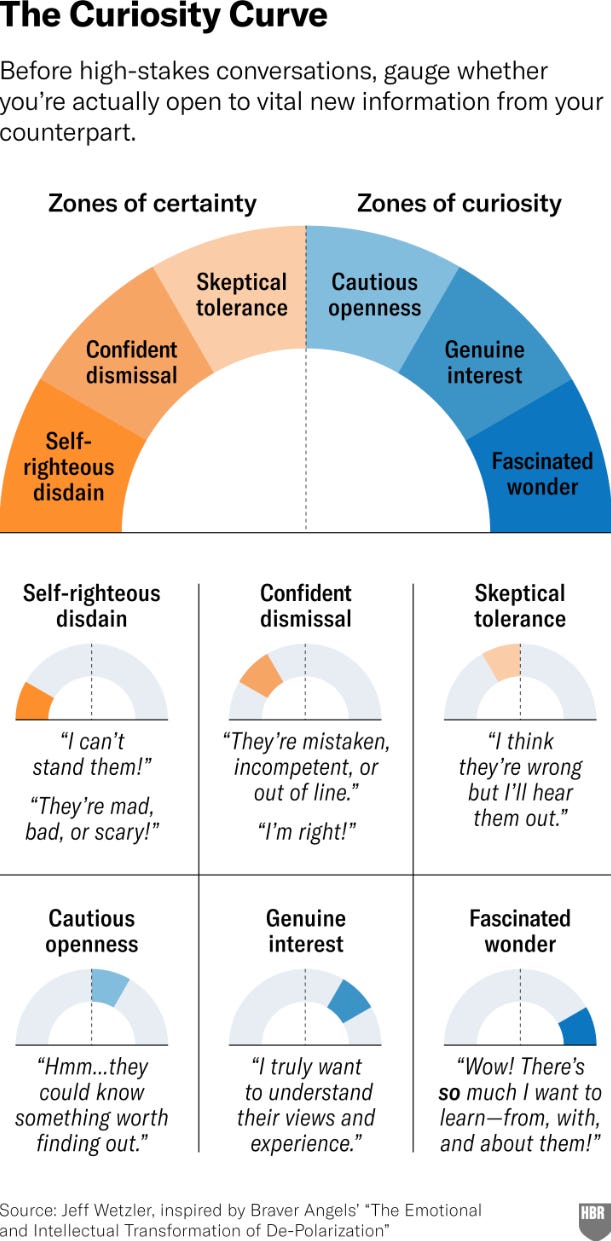

The Curiosity Curve

Curiosity increases your ability to process new information and respond creatively to complex problems. It activates the brain’s learning and reward centers, increasing your capacity for insight and creative problem-solving. Curious individuals demonstrate greater resilience when facing unexpected information.

For a quality engineer, curiosity is a superpower, and entering a conversation from a place of curiosity is likely to lead to much better outcomes. So, before heading into a conversation with a team, check where you are on the curve and ask yourself a few questions to nudge yourself a place or two to the right. Via The Right Way to Prepare for a High-Stakes Conversation

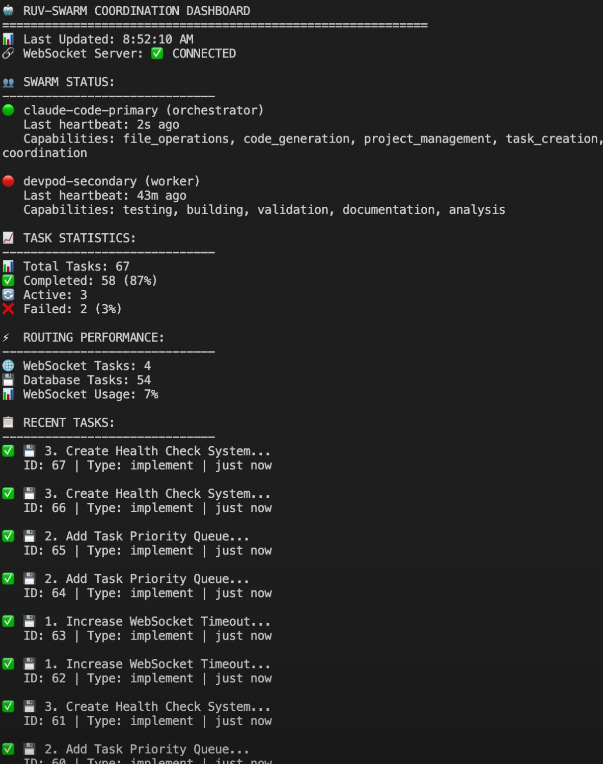

What Happens When Software Systems Can Test Themselves?

This is a thought experiment, so I’m suspending any scepticism and going with the idea that this is true…

Background: Igor was running an AI swarm experiment. After the AI set up the swarm, he wanted proof that it worked:

𝗠𝗲 → "Prove me that this is working"

𝗦𝘄𝗮𝗿𝗺 → 𝘛𝘩𝘪𝘯𝘬𝘪𝘯𝘨...

𝗦𝘄𝗮𝗿𝗺 → "𝘚𝘶𝘳𝘦 𝘵𝘩𝘪𝘯𝘨. 𝘐'𝘭𝘭 𝘪𝘮𝘱𝘭𝘦𝘮𝘦𝘯𝘵 𝘺𝘰𝘶 𝘢 𝘥𝘢𝘴𝘩𝘣𝘰𝘢𝘳𝘥 𝘸𝘩𝘦𝘳𝘦 𝘺𝘰𝘶 𝘤𝘢𝘯 𝘮𝘰𝘯𝘪𝘵𝘰𝘳 𝘮𝘺 𝘱𝘳𝘰𝘨𝘳𝘦𝘴𝘴 𝘢𝘯𝘥 𝘴𝘵𝘢𝘵𝘶𝘴"

Now, let’s assume that the dashboard works and isn’t all fake (a few weeks ago, Kent Beck mentioned that while using TDD on an AI project, the AI would sometimes just delete tests to make them pass). If you can simply ask the system to demonstrate that it works, do we still need QA, testing, or even quality engineering?

Looking at this dashboard example, I’d say yes. While it shows that things are up and running, it gives no insight into how well they’re running or whether what it reports is even worth knowing. That still requires a deeper understanding of the system and of which quality attributes matter to your stakeholders. That’s not to say you couldn’t ask the AI system to show you those things, but you still need to do the work to find out what a healthy running system looks like, who your stakeholders are, and what they value.

If we further assume that future software is built by AI with people guiding it, then the role is going to shift from doing to inspecting. In that world, understanding how quality is created, maintained, and lost will be very valuable in helping to build resilient and robust software systems. Via "Prove it's working." The swarm's response changed everything. | Igor Moochnick

Using Agents to Clean Up Your Code

I was chatting to Kent Beck today about how agent swarm programming is more like dev manager work sped up than developer work, while my Claude-flow swarm spent 20 minutes or so completely refactoring my Swift code base and tidying it up. As Kent says, Tidy First, before adding more functionality. With a few minor fixes to report back as I built it in Xcode, it worked fine afterwards. One view went from 1100 lines of code to 350. This is the second full refactor I’ve done. I find that using a single instance of Claude to make fixes and changes tends to add code in the views and build up cruft, while a swarm builds much better structured code.

The video in this post is mesmerising to watch. One alternative future I can see is developers working more as orchestrators of multiple agents (Adrian likened it to dev manager work): some to write code, some to clean it up, some to document the work, and maybe even some to test it. In that world, you might still want human exploratory testers to give feedback on what the system is like for people to use, and quality engineers (possibly the same individuals) working with development teams to advise on the kinds of “quality” agents they might want to deploy. I wonder whether we’ll look back on pre-LLM times and marvel at how we ever got work done, much as people now wonder what building software was like before the internet. Via Adrian Cockcroft on LinkedIn

Framing Quality Conversations: Destructive vs. Collaborative

Using a more positive view around "confirming this is shippable" rather than looking to be destructive and protect from a change is great when your context is closer to engineers, looking to be pragmatic and agile/velocity-focused. It aligns with how engineers think about testing and helps them to see you as a force for keeping up the team's momentum (especially in CI/CD).

The protective bug-hunting view of testing works better in a slow pace of change, possibly regulated, industry where mistakes are costly and cannot be reversed out easily. However, using this approach in the wrong context can give you a reputation for being adversarial and having too high of a bar for quality.

Callum’s comments here really got me thinking about how destructive testing, and approaching the work from that perspective, tends to reinforce an “us versus them” mindset: devs build it and testers destroy it. While I agree that "testers find bugs in products" is a core part of the role, how we frame that work and the narratives we use shape how we work with the rest of the team. Are you coming in as an adversary or as someone helping to create a better product? That’s not to say you stop finding bugs or sugarcoat your feedback to spare developers’ or product owners’ feelings. Rather, it’s about aligning more closely with what the team is actually trying to do and the outcome they want to achieve, and helping them see whether they’re achieving it. Via Callum Akehurst-Ryan on LinkedIn

Writing the Code Was Never the Bottleneck

For years, I’ve felt that writing lines of code was never the bottleneck in software engineering.

The actual bottlenecks were, and still are, code reviews, knowledge transfer through mentoring and pairing, testing, debugging, and the human overhead of coordination and communication. All of this wrapped inside the labyrinth of tickets, planning meetings, and agile rituals.

As Pedro mentions in his post, AI accelerates the rate at which lines of code are produced. While there are techniques to reduce the line count, they don’t remove the overhead of making sense of all that code and sharing that knowledge. We will still need to build quality into our products, processes, and people, and as the rate of change in software increases, we will need to do so faster than ever before. Via Writing Code Was Never The Bottleneck - ordep.dev

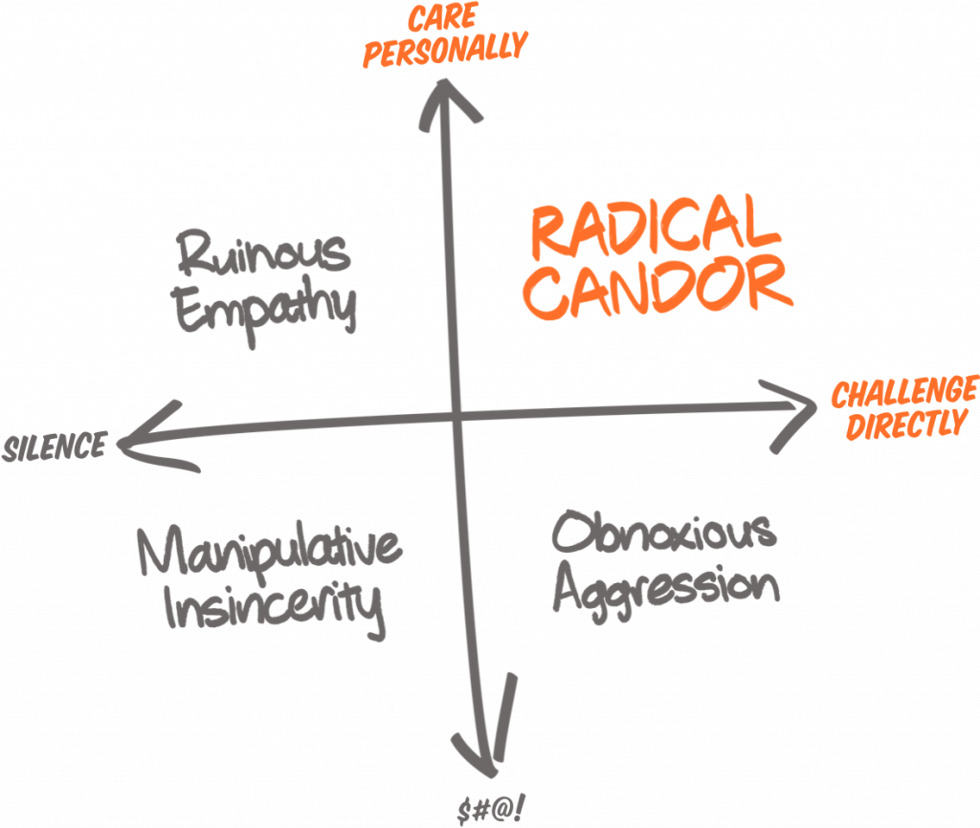

Disagreeable Givers Are Respectfully Candid

I'm thinking about 𝘿𝙞𝙨𝙖𝙜𝙧𝙚𝙚𝙖𝙗𝙡𝙚 𝙂𝙞𝙫𝙚𝙧𝙨 again.

Specifically:

∙Disagreeable ≠ Difficult.

∙Challenger ≠ Contrarian.

∙Givers who push back? Good to have around.

They sound a lot like Testers to me.

We’re here to probe.

To question assumptions.

We’re not hired to nod politely.

We're here to make things better, even if it makes people squirm.

Sounds great eh? Heroic even.

Here's the trouble:

∙Do it well, you look like a legend.

∙Do it badly, you look like a dickhead.

∙Do it 𝙑𝙀𝙍𝙔 badly, and it can be... career-limiting 😳

Vernon shared a great post on LinkedIn that reminded me of the term "disagreeable givers", coined by Adam Grant in his TED talk "Are you a giver or a taker?". He describes agreeable givers as people who say yes to everything, while disagreeable givers have others' best interests at heart but are willing to give tough feedback that's often hard to hear.

This reminds me of Kim Scott's Radical Candour framework, where agreeable givers fall into the ruinous empathy quadrant and disagreeable givers are radically candid. Doing it well means you care personally and challenge directly; doing it badly means you lose the caring-personally part and drop into the obnoxious aggression quadrant.

Combining these two concepts (givers and candour) can really help quality engineers steer towards the legendary side of the Vernon scale. Personally, I prefer being respectfully candid to being radically candid, as I feel people take the radical part too far and end up being the dickhead in the team. Via I'm thinking about 𝘿𝙞𝙨𝙖𝙜𝙧𝙚𝙚𝙖𝙗𝙡𝙚 𝙂𝙞𝙫𝙚𝙧𝙨 again. | Vernon Richards

Let me know which links resonated or if you’ve read anything lately that should make the next Linky. Always up for a good share.

Past Linky Editions

Linky #11 - Tools Don’t Think. Engineers Do.

This week’s links cover: reflections from Agile Manchester, how good habits shape team behaviour, why LLMs need context, not just prompts, what makes testing meaningful, and how metrics shape (or warp) team incentives.