How software systems fail: Part 3a - People

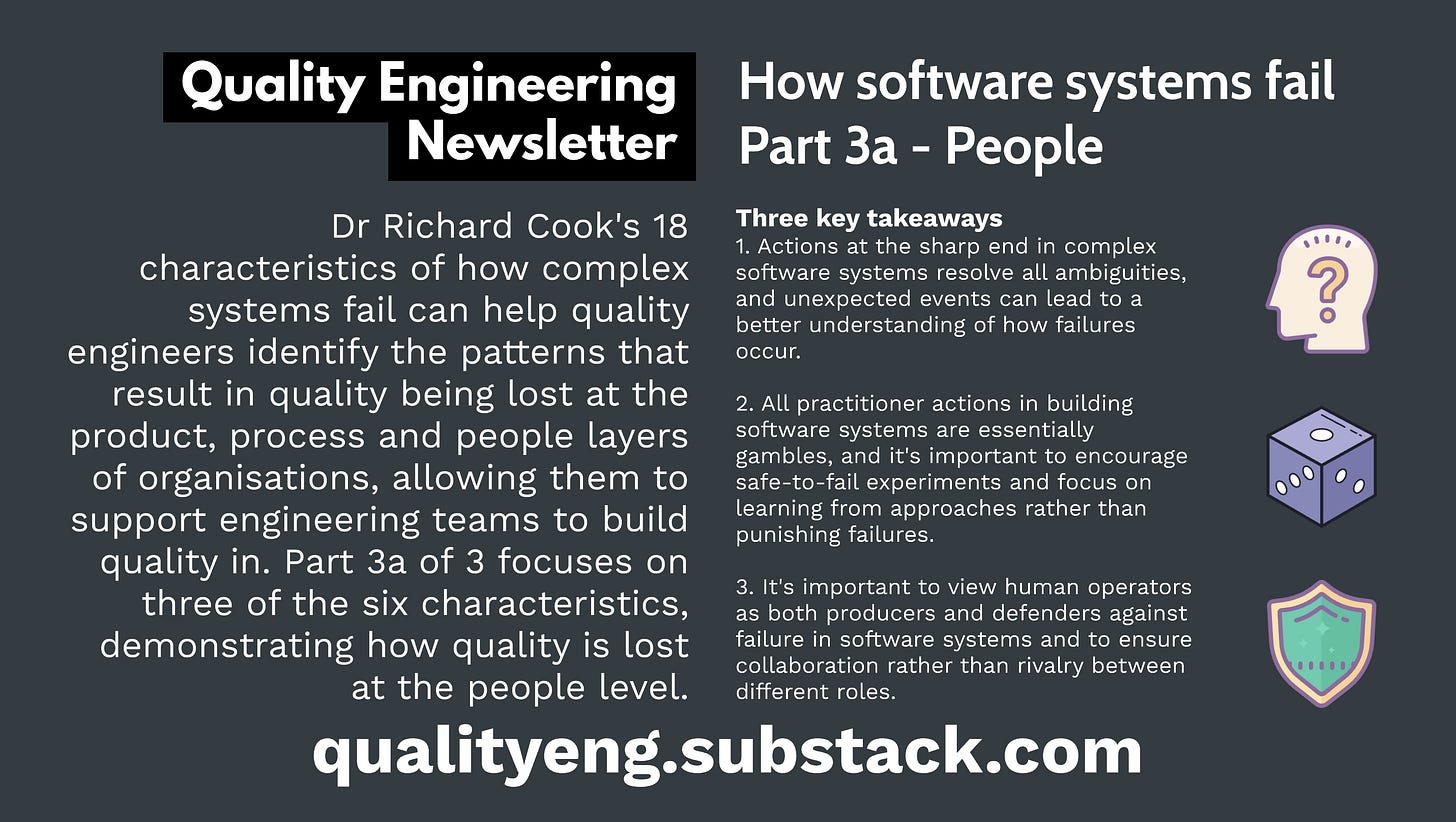

Dr Richard Cook's 18 characteristics of complex systems failure applied to software. Part 3a of 3 focuses on three of the six characteristics, demonstrating how quality is lost at the people level.

Key insight

Failure resolves all ambiguities within complex software systems. It also highlights that the actions of software system creators are gambles, and that those creators often play a dual role as both producers of and defenders against failure.

Three key takeaways

Actions at the sharp end in complex software systems resolve all ambiguities, and unexpected events can lead to a better understanding of how failures occur.

All practitioner actions in building software systems are essentially gambles, so it's important to encourage safe-to-fail experiments and to focus on learning from the approaches taken rather than punishing failures.

It's important to view human operators as both producers of and defenders against failure in software systems, and to ensure collaboration rather than rivalry between different roles.
