Linky #22 - Capability Beats Heroics

Automation enablement, flow, and decision-making under pressure.

This week’s Linky has one theme running through it: capability beats heroics. Whether it’s automation, flow, team dynamics, or decision-making, the same lesson keeps showing up. If you want speed and quality, you build the foundations that make good outcomes repeatable.

Latest post from the Quality Engineering Newsletter

For one of my final posts of 2025, I dug into the most popular themes of the year from QEN. If I had to sum it up, people are looking to understand how to engineer quality into systems and which approaches help them do that. The post highlights the QEN posts that helped people figure some of that out.

From the archive

With 2025’s theme being how to engineer quality into systems, I was reminded of my post from October 2023 on the techniques that help quality engineers build quality into products, processes, and people. A key takeaway from that post:

Adopting a systems thinking view of the team allows Quality Engineers to balance the short-term gains (building quality into products) with long-term benefits (building quality into the people) while helping the team connect both (building quality into the process). Taking this approach gives teams a legacy that outlasts even them.

Build test automation capability

Robert’s team took a delivery-focused approach. They first got the core journeys in place and stable, then gradually added only the paths through the system that made sense, not every single one. They then tested at different layers, focusing on where the problems actually reside, so that changes to the system are safer to make.

They also turned the automation team into an enablement team (a Team Topologies pattern) that helps other teams build out their own tests rather than owning the tests for them. And critically, pipeline health was at the top of the priority list. The pipeline is what lets you ship, so it must be stable, reliable, and resilient. Simple yet very effective.

If you’re looking for an automation strategy, this is it. Copy and profit via Stop Building Test Automation Teams | LinkedIn | Robert Meaney

I thought they were bored

I’ve given a lot of talks over the years, and I usually gauge how well a talk is going by the looks on the audience’s faces. Recently, people looked, well, bored.

💡That’s when I got it.

They weren’t bored.

That was their concentration face.🧐

Turns out they were thinking. It just goes to show that first impressions aren’t always right. Via I’ve given a lot of talks this year | LinkedIn | Jit Gosai

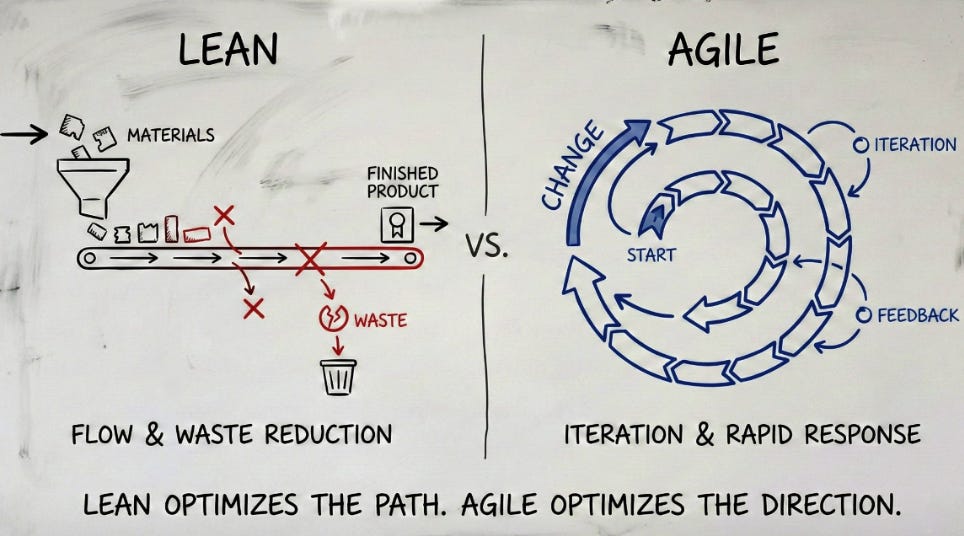

Difference between lean and agile

Lean Management (The Philosophy of Efficiency)

Lean originated in manufacturing (Toyota Production System) and is rooted in maximizing customer value by minimizing waste.

Core Focus: Identifying and eliminating Muda (waste) in all its forms: overproduction, waiting, unnecessary inventory, defects, etc., to create a smooth, efficient, predictable value stream (“flow”).

Key Question: “Is this step adding value for the end customer?” If the answer is no, eliminate it.

Strategic Goal: Achieve efficiency, stability, and predictability in delivery.

Agile Management (The Philosophy of Adaptability)

Agile, born from the need for flexibility in the digital world, is centered on responding to change over following a rigid, detailed plan.

Core Focus: Iterative delivery, continuous feedback from the customer, and constant collaboration. Delivering functional value in small, manageable increments.

Key Question: “How quickly can we incorporate new market information or pivot strategy?”

Strategic Goal: Achieve effectiveness, flexibility, and rapid market validation.

🔑 The Strategic Takeaway

The two philosophies are complementary, not competing:

Lean provides the process discipline needed to make your work efficient. (Do things right).

Agile provides the flexibility needed to ensure you are building the right thing. (Do the right things).

One core skill I think every Quality Engineer needs is a solid grounding in lean and agile software delivery practices. These practices have really helped me understand how to build quality into team processes, and they help me work with teams to spot where they could be doing things differently. The thing is, people often use the two terms interchangeably, but as the infographic above shows, they are different and can be used in complementary ways. Via Lean Vs Agile | LinkedIn | Omar Abdelfatah

Tests as micro tools

...In the early days of the industry, testing used to favour a gut feel driven approach. Traditional development handed code to testers who lacked system understanding and had to guess how things worked. Test-driven development (TDD) transformed testing from guesswork into an engineering discipline by embedding understanding directly into test suites which were made out of thousands of small, contextual tests that captured the system model and domain knowledge.

What might be less obvious is that those small tests are in fact highly contextual tools i.e. they have inputs and outputs and they are applied to a specific problem (the test scenario).

We should be applying those same practices to development by starting with the development experience and using composable micro tools...

I’d never thought of tests as micro tools before, but they are highly contextualised and tell you something very specific about the code under test.

Something interesting Simon Wardley says is that testing is an engineering practice. I’m not sure exactly what he means, but my current understanding is that testing is what helps you build your system (think TDD), hence it’s a practice that helps you engineer code. Via Software engineering is evolving into agentic engineering | LinkedIn | Simon Wardley
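To make the “tests as micro tools” idea a little more concrete, here is a minimal Python sketch. The `apply_discount` function and its rounding rule are hypothetical, invented purely for illustration. The point is that each test has a fixed input, an expected output, and a name that records one specific piece of domain knowledge about the code under test.

```python
def apply_discount(price_pence: int, percent: int) -> int:
    """Hypothetical function under test: return the price after a percentage discount."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    # Integer division rounds the discount down to whole pence.
    return price_pence - (price_pence * percent) // 100


# Each test is a small, contextual tool: one input, one expected output,
# and a name that records a specific rule about the domain.
def test_discount_is_rounded_down_to_whole_pence() -> None:
    assert apply_discount(999, 10) == 900  # a 99.9p discount becomes 99p


def test_zero_percent_leaves_price_unchanged() -> None:
    assert apply_discount(500, 0) == 500


def test_discount_over_100_percent_is_rejected() -> None:
    try:
        apply_discount(500, 150)
    except ValueError:
        return
    raise AssertionError("expected ValueError for a discount over 100%")


if __name__ == "__main__":
    for test in (test_discount_is_rounded_down_to_whole_pence,
                 test_zero_percent_leaves_price_unchanged,
                 test_discount_over_100_percent_is_rejected):
        test()
    print("all tests passed")
```

The functions follow pytest’s `test_` naming convention, so pytest could collect them from a file such as `test_discount.py`, but the `__main__` block runs them without any dependencies.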

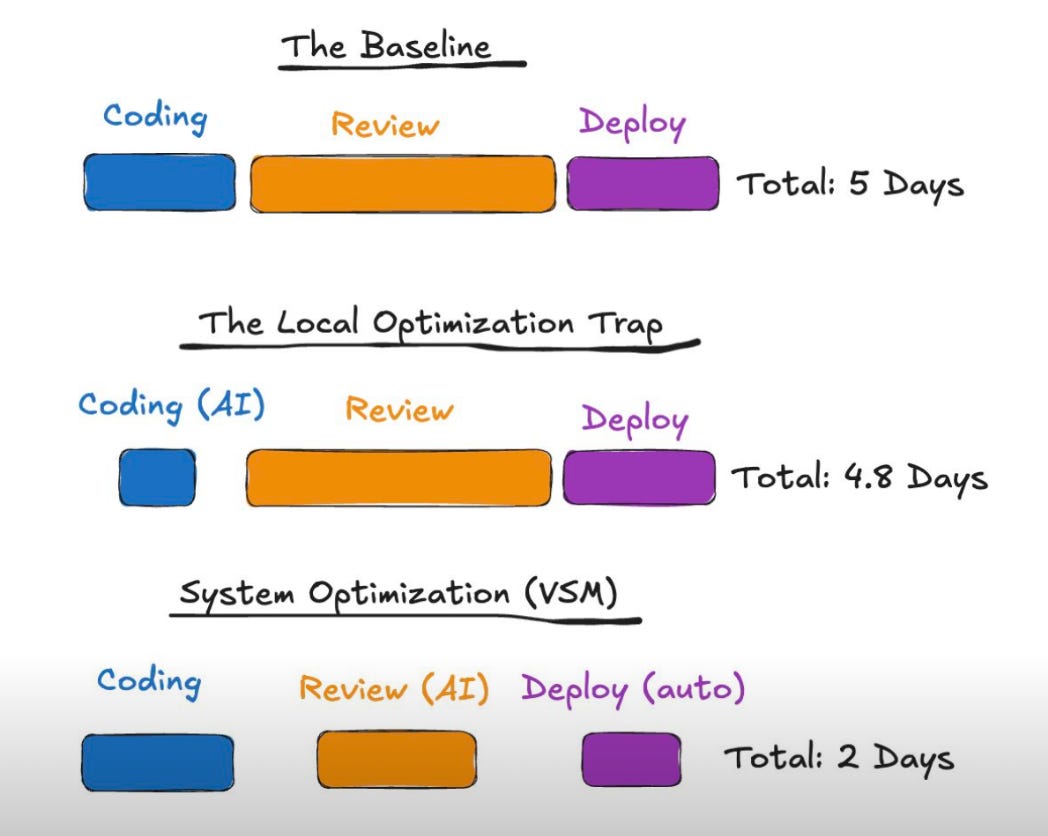

Theory of constraints in action

This is Theory of Constraints in action. Identify your bottleneck, then work to optimise that constraint. Once it has improved, check what the new constraint is and start the process over. I walk through this step by step in my talk on how faster-delivering teams kill the test column.

The author calls this practice of identifying your constraints Value Stream Management (VSM). However you do it, this is a great way to focus your efforts on improving your ability to deliver value, not just doing more work. Via We are obsessed with optimizing the 5 minutes of coding | LinkedIn | Ville Aaltonen
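One rough way to picture the identify, improve, re-check loop is the Python sketch below. The stage names and throughput figures are made up, and it assumes, simplistically, that a value stream can only deliver as fast as its slowest stage and that focused effort doubles that stage’s capacity. Real value streams have queues, variability, and rework that this deliberately ignores.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    items_per_week: float  # hypothetical measured throughput of this stage


def find_constraint(stages: list[Stage]) -> Stage:
    # The slowest stage caps how much the whole value stream can deliver.
    return min(stages, key=lambda s: s.items_per_week)


def system_throughput(stages: list[Stage]) -> float:
    return find_constraint(stages).items_per_week


if __name__ == "__main__":
    stages = [Stage("code", 20), Stage("review", 6), Stage("test", 8), Stage("deploy", 15)]
    for round_no in range(1, 4):
        constraint = find_constraint(stages)
        print(f"Round {round_no}: constraint is {constraint.name} "
              f"({system_throughput(stages)} items/week)")
        # Assumed improvement: focused effort doubles the constraint's capacity.
        constraint.items_per_week *= 2
```

Running it, the constraint moves from review to test and then back to review. Doubling the `code` stage instead would leave `system_throughput` unchanged, which is exactly the trap of optimising the five minutes of coding.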

Agile Leads sound like quality engineers

But let’s be honest, entire careers were built on ceremonies, playbooks, and theory 🧹

That era is over.

Enter the Agile Lead 🦸🏾

Less about doing Agile...

Much more about being agile and delivering outcomes ✅

This is a role that asks:

• Where is delivery breaking down? 🧩

• What is blocking flow? 🚧

• Which behaviours are holding the organisation back? 🧠

These questions all remind me of what a quality engineer does to build quality into the process. Via Agile Lead: Delivering Value, Not Just Frameworks | LinkedIn | Sanches Clarke

LLM can sway opinions

How good is ChatGPT at changing your mind?

And the short answer is: yes, AI can meaningfully shift political attitudes, and importantly we’re finally seeing how.

With a billion people using AI tools daily, we all need to understand the implications of this. Democracy will be impacted by AI... the only open questions are how much, how quickly, and through what mechanisms.

This study offers some surprisingly clear answers.

What didn’t matter: model size.

We seem to have hit a point where bigger models aren’t meaningfully better at persuasion. Effectively, the models we already had 6–12 months ago were plenty capable of moving political opinions.

What did matter (sometimes dramatically) were post-training choices and prompting strategies.

Let’s break those down.

Reward-model persuasion tuning.

One of the most powerful interventions was fine-tuning models using a reward model that literally optimizes for persuasive output. This alone increased persuasion by up to 50%+ in some conditions, basically by learning which outputs people find compelling.

Prompting techniques

The study compared several prompting strategies, including: Debate prompts, Storytelling prompts, Deep canvassing prompts, and Normative/“mega-norms” prompts. The clear winner was Information-rich prompts, flooding the conversation with relevant claims and facts, increasing persuasion by up to 27%.

(Interestingly, this approach also introduced more inaccuracies - a tradeoff worth paying attention to.)

Overall takeaway: This is the clearest demonstration yet that conversational AI can meaningfully shift political attitudes.

Something QEs should keep in mind. If we’re using LLMs to help us make decisions, then we need to really look at who created the model and how we’re prompting it. This becomes more interesting when we incorporate these models into our systems for user interaction. We could optimise the system to sway users more towards our products. This could have very interesting regulatory implications for businesses going forward. Via Huge New AI Persuasion Study | LinkedIn | Samuel Salzer

You've got to go slow to go fast

Rob Bowley has been running a lot this year and has slowly seen his fitness improve, which reminded him:

Software development works the same way. We like to celebrate bursts of speed, but if you want a team that reliably gets things done, the answer is not more heroics. The real progress comes from the quiet, sustained work that raises a team’s baseline.

This is why we need to improve the environment in which the work happens, not just the processes and techniques. By gradually improving the system, we can make sustained progress rather than relying on quick fixes that only last in the short term and often incur unforeseen technical debt. Via Consistency Trumps Heroics in Software Development | LinkedIn | Rob Bowley

Don’t build skyscrapers on sand

What I did at a previous company was that any technical debt made for product reasons was called “product enablement.” That had to be repaid before product could iterate on what we built. The rationale was this:

we needed to ship fast (speed)

it doesn’t have to be perfect because we don’t know if we’re going to keep the feature.

if we do keep the feature, we have to tighten up the foundation before we iterate on it. We won’t build skyscrapers on sand.

Things like flakey tests isn’t debt. It’s a papercut.

You’re not hemorrhaging yet, but it slows you down, and you don’t want to die by death of a thousand papercuts. If you want speed, you have to address the issues that prevent speed. We try to address papercut regularly, every cycle. But we dedicate whole cycles to papercuts about once a quarter, honestly. It’s great for when folks start taking PTO and half your team is out.

I really like the idea of recording a technical debt item as product enablement. This way, it’s managed like any other item on the backlog and doesn’t get delayed or put off like tech debt items can sometimes.

And the Reddit poster is right: flaky tests are bugs and should be fixed as such. No one writes a flaky test on purpose; the flakiness comes about after the fact.

But I’m not too keen on dedicating whole sprints to bug fixing, because that sprint doesn’t deliver any business value (only engineering value), and leadership begins to resent it. Little and often is best here, but I get that it’s not always possible. Just try to avoid it becoming a habit.

A good heuristic here is that tech debt is like fitness. You can’t skip the gym, healthy eating, and good sleep for a month and then try to catch up on it all in a single week. The benefits accumulate little and often over time. If you find yourself skipping healthy habits, then look at why you had to skip them and address that problem. That’s what continuous improvement is all about: improving your capability to do the work, not just working harder doing the same thing. Via After 7 years at the same org, I’ve started rejecting “Tech Debt” tickets without a repayment date. | Reddit
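Treating flaky tests as bugs starts with spotting them. One simple heuristic is to rerun a test several times against the same code: if it both passes and fails, it’s flaky. The Python sketch below assumes a test is a plain callable that raises `AssertionError` on failure; the `classify` helper and the example tests are hypothetical and not taken from the Reddit thread.

```python
from collections import Counter
from itertools import cycle
from typing import Callable


def classify(test: Callable[[], None], runs: int = 20) -> str:
    """Rerun a test against unchanged code and classify it by its outcomes."""
    outcomes = Counter()
    for _ in range(runs):
        try:
            test()
            outcomes["pass"] += 1
        except AssertionError:
            # Only assertion failures count as test failures;
            # any other exception propagates to the caller.
            outcomes["fail"] += 1
    if outcomes["pass"] and outcomes["fail"]:
        return "flaky"  # same code, different results: a bug to fix
    return "pass" if outcomes["pass"] else "fail"


# Hypothetical examples: a stable pass, a stable fail, and a test whose result
# alternates between runs, standing in for an order- or timing-dependent test.
_results = cycle([True, False])


def always_passes() -> None:
    assert 1 + 1 == 2


def always_fails() -> None:
    assert 1 + 1 == 3


def sometimes_fails() -> None:
    assert next(_results)


if __name__ == "__main__":
    for t in (always_passes, always_fails, sometimes_fails):
        print(t.__name__, classify(t))
```

Rerunning only tells you a test is flaky, not why; the fix still means tracking down the shared state, timing, or ordering that makes the result change.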

The true test is how teams perform under pressure

The difference isn’t about tooling or process maturity. It’s about how the team works together under pressure. I’ve always found that pressure reveals the true characteristics of a team. And the AI era is no different–DORA’s data makes that clear. AI doesn’t fix team dynamics, it amplifies them. Strong collaboration, clear ownership, and healthy engineering culture are all critical. Without those, AI just adds noise.

It’s when the pressure is on that the true characteristics of a team, or any system, show up. In those moments, all the theatre of looking collaborative, high performing, and harmonious drops away, and the team’s true character emerges.

It’s the same for people as it is for a business. It’s what people do when things go wrong that tells you how good they really are. And it’s leadership that sets that tone in those moments. If leaders are stressed and pointing fingers, then your team will be too. Culture always flows from the top. Via 7 Team Archetypes in the AI Era | LinkedIn | Rob Zuber

Sometimes you end up in the wrong column and it gets deleted

Layoffs suck as they are not personal and do not take into account how you performed. You end up just being in the wrong column in an excel sheet that got deleted and there is nothing no one can do about it. And I think that makes it harder to deal with cause there is nothing you can do to fix it or to change it. There is no one to blame, no-one at fault. So you just become one of the unlucky ones and you have to leave the job and team and company you love.

Debbie led the Playwright community and bridged the gap between the community and Microsoft. I came across her post via Qambar’s LinkedIn post, and this point really stood out to me. It’s happened to me, and it’s happening right now to others, too. It’s hardly ever personal, but we have a tendency to take it that way. As Debbie says, talking about it helps. Debbie’s full post: Laid off from my dream job, what now?

Plan continuation bias

Plan continuation bias is the cognitive, emotional and social tendency to stick with an established plan even when new information or changing conditions mean it no longer makes sense, is unlikely to deliver value, or increases risk to intolerable levels.

It’s amplified by confirmation bias, workload and pressure, ever higher sunk costs and proximity to the goal, symbolic goal fixation, power dynamics, and the myriad interpersonal risks of challenging the plan; and it often leads individuals and teams to press on long after they should adapt or stop.

I’ve seen this in teams and even in myself. For me, it’s the embarrassment of saying I chose wrong and worrying what others will think. I know I shouldn’t, but it’s always there in the back of my mind.

There must be a better way to deal with it, but so far, my approach has been to muster the courage to say I made a mistake. Over time, it does get easier to say I failed, but the fear is still there. Via Plan continuation bias is... | LinkedIn | Tom Geraghty

That’s it for my last weekly Linky of 2025. Next week I’m planning a “Linky of the Year” roundup: the posts I keep coming back to, and the patterns I think they point to for quality engineering in 2026.

Past Linky Posts

Linky #21 - How Quality Emerges

This week I found myself returning to a simple but uncomfortable idea: most of the quality we experience in software is a side effect of how our organisations think, learn, and make decisions. Not the tests we write or the tools we use, but the behaviours we reinforce, the mistakes we learn from, and the language we speak when we raise issues.

Linky #20 - Quality Isn't Magic

This week’s links are all around the same idea: quality isn’t magic, it’s how we make choices. Like how we give feedback. How we talk about roles like tester and QE. How we build trust in our systems. And how we use AI without letting it quietly pile up future debt. Nothing here is flashy; it’s the everyday behaviours that shape how our teams learn and build better software.

Linky #19 - From Shift-Up to Showing Up

In this week’s linky, we look past the language of shifts and frameworks to focus on the work that matters: connecting with people, handling change safely, and practising what we learn every day. Real quality starts with how we show up for it.
